Our Read on Readers: 5 Insights for Content Marketers

The power of Q&As, headline rules, and other valuable lessons from a large-scale content analysis

Nearly 60% of marketers expect their content budgets to increase in 2023, according to a recent WordPress report. But as companies continue placing big bets on editorial initiatives and thought leadership, few understand how to assess their program's performance.

At Message Lab, we've developed a content intelligence practice: a process that combines quantitative and qualitative data to evaluate a defined subset of articles and derive actionable insights. With it, we can identify the highest and lowest performers, recognize patterns that lay the foundation for testing, and form hypotheses that inform future editorial planning.

Content intelligence for content marketers

This type of analysis is especially fruitful when we can apply it to a large data set. So when one of our clients asked us to analyze the performance of a program that included more than a hundred articles, we jumped at the opportunity. Below are five lessons from that exercise that you can apply to your content program. These lessons also demonstrate the value that can be gleaned through our approach to content intelligence.

1. Q&As and roundtables are as engaging as executive bylines.

There’s a common misconception that the term “thought leadership” is synonymous with first-person bylines.

It's no wonder. The job of a bylined story is to elevate the profile of the author (or the company the author works for) through expert insight and, well, leading thoughts. Well-written bylines with a strong POV do exactly that: Data consistently shows that they are among the most-read and most-discussed articles in any content program.

But stopping there would be a mistake. Message Lab's analysis of our client's program showed that Q&As, as well as roundtables in which multiple execs discuss the same topic, often generate engaged time similar to, and in many cases better than, that of bylines.

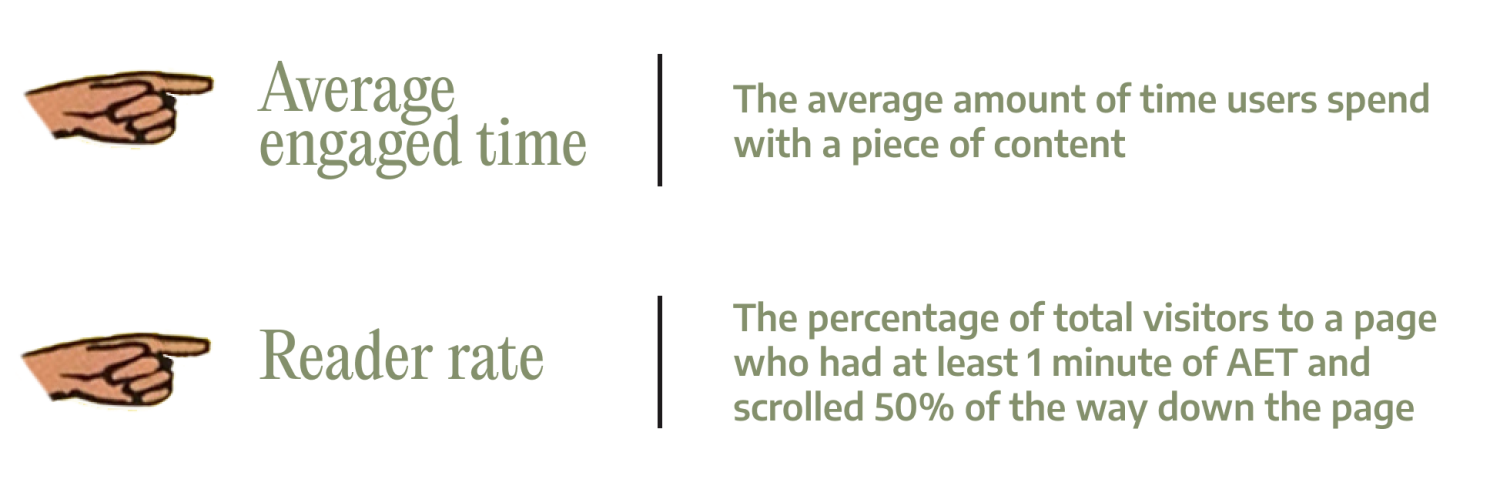

To arrive at this finding, we cross-analyzed each format against average engaged time (AET), our proprietary metric for the average amount of time users spend with a piece of content. We also assessed each format against a custom metric we call reader rate: the percentage of total visitors to a page who had at least 1 minute of engaged time and scrolled at least 50% of the way down the page. We use it as a proxy for the percentage of visitors who actually "read" a meaningful part of the article.
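For teams that want to try this kind of cut on their own analytics export, here is a minimal Python sketch of the two metrics. It assumes a hypothetical per-visit record holding the article's format, the visitor's engaged seconds, and the fraction of the page scrolled. Message Lab's AET is proprietary and may be computed differently; here it is approximated as a plain mean of per-visit engaged time, so treat this as an illustration of the definitions above, not the method used in the study. The sample data at the bottom is made up.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Visit:
    """One visitor's session on an article page (hypothetical schema)."""
    article_format: str     # e.g. "byline", "q&a", "roundtable"
    engaged_seconds: float  # time the visitor was actively engaged
    scroll_depth: float     # fraction of the page scrolled, 0.0 to 1.0


def is_reader(visit: Visit, min_seconds: float = 60.0, min_scroll: float = 0.5) -> bool:
    """A visitor counts as a reader with >= 1 minute engaged and >= 50% scrolled."""
    return visit.engaged_seconds >= min_seconds and visit.scroll_depth >= min_scroll


def summarize_by_format(visits: list[Visit]) -> dict[str, dict[str, float]]:
    """Return approximate AET (seconds) and reader rate (%) for each format."""
    groups: dict[str, list[Visit]] = defaultdict(list)
    for v in visits:
        groups[v.article_format].append(v)

    summary = {}
    for fmt, group in groups.items():
        total = len(group)
        summary[fmt] = {
            # Assumption: AET approximated as the mean of per-visit engaged seconds.
            "aet_seconds": sum(v.engaged_seconds for v in group) / total,
            "reader_rate_pct": 100.0 * sum(is_reader(v) for v in group) / total,
        }
    return summary


def format_mmss(seconds: float) -> str:
    """Render seconds as m:ss, the way AET figures are reported in this article."""
    minutes, secs = divmod(round(seconds), 60)
    return f"{minutes}:{secs:02d}"


# Illustrative, made-up visits; not data from the client study.
sample = [
    Visit("q&a", 150, 0.8),
    Visit("q&a", 30, 0.2),
    Visit("byline", 90, 0.4),
    Visit("byline", 45, 0.9),
]
for fmt, stats in summarize_by_format(sample).items():
    print(fmt, format_mmss(stats["aet_seconds"]), f"{stats['reader_rate_pct']:.0f}%")
```

Because reader rate requires both thresholds at once, a long visit with shallow scrolling (the 90-second byline visit above) does not count, which is what makes it a stricter signal than AET alone.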

Our analysis yielded a surprising takeaway. Not only did roundtables and Q&As edge out bylines in engagement, but there was also less variability across the sample:

Roundtables – 2:07 AET

Q&As – 2:00 AET

Bylines – 1:57 AET

Q&As and roundtables also had higher reader rates, 25% each (exceeding our cross-client baseline benchmark of 20%), while bylines stalled at 17%. A recommendation for the client emerged: The content program could integrate more Q&As and roundtables into the editorial calendar going forward and could expect engagement at least on par with bylines.

Of course, editorial considerations and strategic caveats should be taken into account. In a roundtable, an exec ends up sharing the "stage" with peers, which can elevate the conversation but may not sit well with every executive. Q&As, for their part, not only bring a level of authenticity to an executive's voice but can also be easier to execute than bylines. That matters because thought leaders tend to be people whose time is scarce, and it makes the Q&A an attractive format to produce at volume.

This same analysis yielded four additional insights, all of which led to either concrete recommendations or hypotheses for experimentation.

2. Short headlines draw readers in.

Of the 10 articles with the highest reader rates, 9 had headlines shorter than 40 characters. It was unclear why short headlines were enticing more readers, so we recommended follow-up A/B testing that pitted short headlines against long ones. The test supported the initial finding: As a general rule, short headlines paired with a descriptive deck are more engaging than long headlines, with or without a deck.
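The top-10 tally behind this finding is simple to reproduce. The Python sketch below assumes a hypothetical per-article record with a headline and a reader rate; it ranks articles by reader rate, keeps the top N, and counts headlines under the 40-character cutoff. It only surfaces a pattern worth testing, which is why the finding above still needed an A/B test. The sample headlines and rates are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Article:
    """Minimal per-article record (hypothetical schema)."""
    headline: str
    reader_rate: float  # share of visitors who qualified as readers, 0.0 to 1.0


def short_headline_tally(
    articles: list[Article], top_n: int = 10, max_chars: int = 40
) -> tuple[int, int]:
    """Count top-N articles by reader rate whose headline is under max_chars.

    Returns (short_count, number_of_articles_considered).
    """
    top = sorted(articles, key=lambda a: a.reader_rate, reverse=True)[:top_n]
    short = sum(1 for a in top if len(a.headline) < max_chars)
    return short, len(top)


# Invented examples; not data from the client study.
articles = [
    Article("Top workplace trends for 2023", 0.31),
    Article("Why every CIO should rethink multicloud app development this year", 0.12),
    Article("Hybrid work, explained", 0.27),
]
short, considered = short_headline_tally(articles, top_n=2)
print(f"{short} of the top {considered} headlines are under 40 characters")
```

Running the same function over the bottom of the ranking (for example, sorting in ascending order) gives the comparison group, which helps show whether short headlines are actually overrepresented among top performers.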

3. In-line graphics are correlated with higher engagement.

In our study, pieces with at least one graphic element in the article (a chart, a pull quote, an illustration, or an image) drove users deeper into the piece. By the numbers, 9 of the 10 articles with the highest reader rates had a visual element, while only 2 of the 10 with the lowest did. While this has been difficult to test at scale, we have since consistently found that articles with in-line images are more engaging than text-only articles.

4. Engagement coincided with the “accessibility” of the article.

All 10 articles with the highest AET (and 8 of the 10 with the highest reader rate) scored a 1 on a 1-to-3 scale, where 1 is most accessible and 3 is least accessible. We assessed accessibility qualitatively, looking at the extent to which jargon and other technical or insider language was used throughout the piece. (An article about hybrid work and culture, for example, would likely rate as more accessible than one about app development in a multicloud environment.)

In contrast, 8 of the bottom 10 articles for reader rate scored a 2 or 3 on the accessibility scale, and many of them covered dense, IT-specific topics with niche appeal. Our recommendation to the client wasn't to publish fewer niche articles, since those narrowly targeted pieces often play an important role in a content program. Instead, we advised spacing them out so that a newsletter edition or social feed didn't scare off audiences with several dense pieces in a row.

5. Headlines that are more “straightforward” keep readers on the page.

In our analysis, 8 of the 10 articles with the highest percentage of users who stayed longer than 15 seconds had headlines that clearly indicated what the article was about. (A straightforward headline might read "Top workplace trends for 2023.") Our hypothesis: Clear, direct headlines draw more highly engaged users because those who click know exactly what they are going to get. More provocative or abstract headlines may drive a user to click through, but the substance of the piece might not match their expectations.

Feeding the feedback loop

These findings demonstrate how insights from content analysis can inform future editorial planning, creating a feedback loop for optimizing your program over time.

Content intelligence is the digital marketer's equivalent of lab testing. For companies with mature thought leadership programs publishing content at a consistent clip, it's the best way to take advantage of the data that content is accruing, spot trends, and use those findings to inform strategy.

About the author

Tyler Moss

Tyler Moss oversees program design for content and communications strategies purpose-built to reach and engage target audiences. Tyler’s team specializes in unearthing a brand’s unique perspective and translating it into narratives that resonate with readers and fill a void in the marketplace of ideas. He has led high-level strategy across a wide range of industries, including technology, nonprofit, higher education, venture capital, financial services, and architecture. Tyler’s background is in magazine writing and editing, with work published by Condé Nast Traveler, The Atlantic, New York, Outside, and others. He was previously editor-in-chief of Writer’s Digest magazine.